Quantum computing

Quantum computing, the terrain where the boundaries of classical physics dissolve into the bizarre world of quantum mechanics, is a field that fascinates not only by its scientific complexity but also by its disruptive potential. Since the first theoretical considerations by physicists such as Richard Feynman and David Deutsch in the 1980s, quantum computing has developed from a futuristic fantasy into one of the hottest fields of applied research.

From the beginnings to the present day

Quantum computing is based on quantum phenomena such as superposition and entanglement to perform computations. The idea that a quantum system can exist in several states simultaneously opened up completely new possibilities in computing. While a classical computer works with bits that are either 0 or 1, quantum computers use qubits, which can be in a superposition of 0 and 1. Combined with entanglement and interference, this allows certain algorithms to solve specific problems dramatically faster than the best known classical methods. It does not, however, make every computation faster.
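To make the difference between a bit and a qubit concrete, here is a minimal sketch in plain Python. It assumes a single qubit modelled as a pair of complex amplitudes. The function names (`hadamard`, `probabilities`, `measure`, `amplitudes_needed`) are hypothetical illustrations, not the API of any quantum-computing library. The sketch shows that a superposition assigns probabilities to 0 and 1, that a measurement still returns only one classical bit, that amplitudes can cancel (interference), and that describing n qubits classically takes 2^n amplitudes.

```python
import math
import random
from typing import Tuple

# Hypothetical model: one qubit as a pair of complex amplitudes (alpha, beta)
# for the basis states |0> and |1>, normalised so |alpha|^2 + |beta|^2 = 1.
Qubit = Tuple[complex, complex]


def hadamard(state: Qubit) -> Qubit:
    """Apply the Hadamard gate; it maps |0> to an equal superposition of |0> and |1>."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state: Qubit) -> Tuple[float, float]:
    """Born rule: each outcome's probability is the squared magnitude of its amplitude."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


def measure(state: Qubit, rng: random.Random) -> int:
    """Sample one measurement: the result is a single classical bit, 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


def amplitudes_needed(n_qubits: int) -> int:
    """A general n-qubit state needs 2**n complex amplitudes to write down classically."""
    return 2 ** n_qubits


if __name__ == "__main__":
    zero: Qubit = (1 + 0j, 0j)
    plus = hadamard(zero)
    print(probabilities(plus))  # both values are 0.5, up to floating-point rounding

    rng = random.Random(42)
    counts = [0, 0]
    for _ in range(1000):
        counts[measure(plus, rng)] += 1
    print(counts)  # roughly half 0s and half 1s

    # Applying the gate again makes the |1> contributions cancel (interference),
    # so the qubit returns to |0> with certainty, up to rounding.
    print(probabilities(hadamard(plus)))
    print(amplitudes_needed(50))
```

The last line hints at why quantum systems are hard to simulate classically, while the sampling loop shows why superposition alone is not a shortcut: each run of the circuit still yields just one bit.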

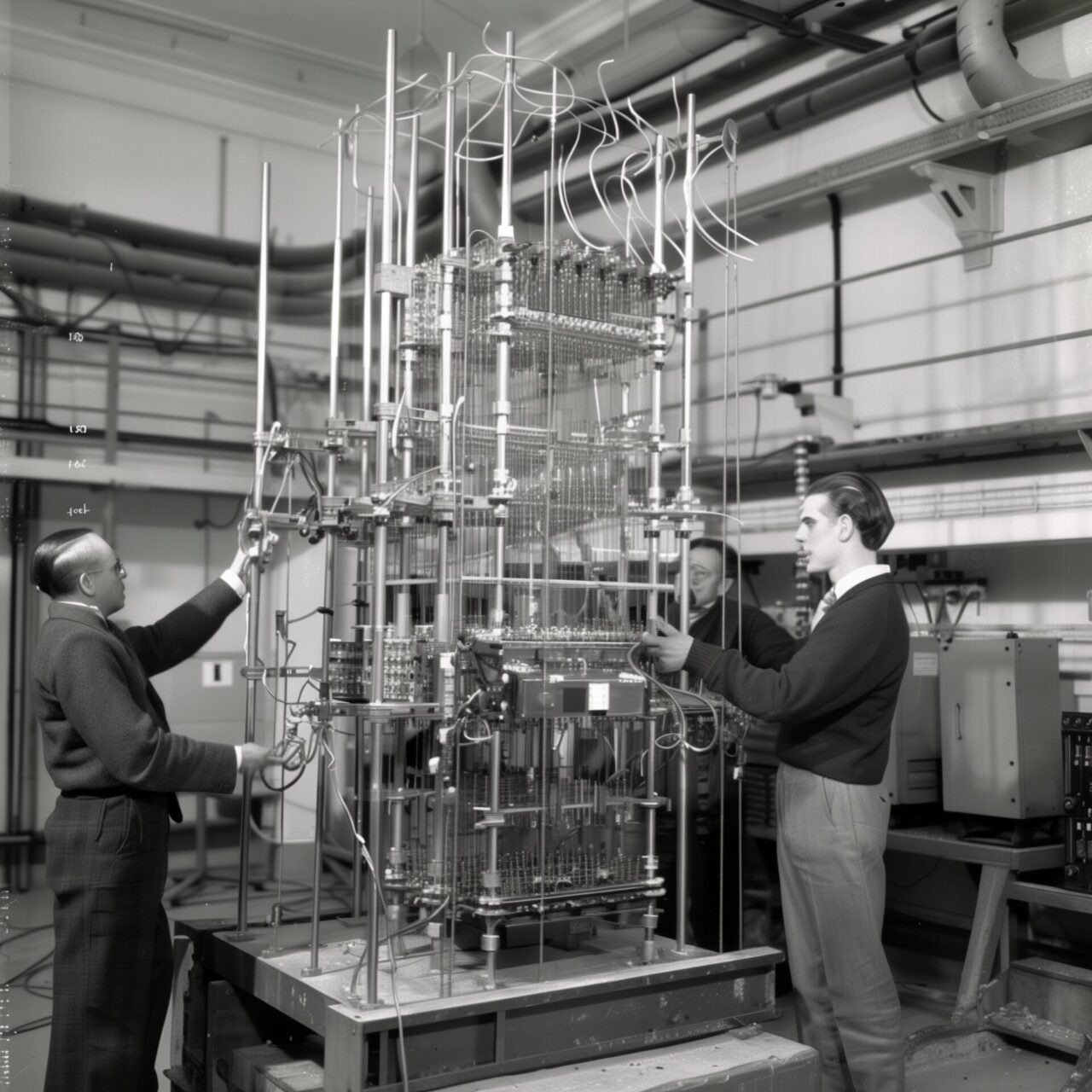

The 1990s brought key theoretical breakthroughs, above all Shor’s algorithm (1994) for factoring large numbers, which showed the enormous potential of this technology, as well as the first small-scale experimental demonstrations late in the decade. Companies such as IBM and Google, along with start-ups such as D-Wave Systems, subsequently began to invest in the development of practical quantum computers.
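Shor’s algorithm reduces factoring a number N to finding the period r of the function a^x mod N; only that period-finding step requires a quantum computer. The sketch below is a hedged illustration, not an implementation of the quantum algorithm: it replaces the quantum step with brute-force search (which takes time exponential in the number of digits of N) and shows the classical post-processing that turns the period into factors. The function names `find_period` and `factor_via_period` are hypothetical.

```python
import math
from typing import Optional, Tuple


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step Shor's algorithm hands to a quantum computer; a brute-force
    search like this one takes time exponential in the number of digits of n.
    Requires gcd(a, n) == 1, otherwise no such r exists.
    """
    value = a % n
    r = 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_period(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing of Shor's algorithm for a chosen base a (1 < a < n)."""
    g = math.gcd(a, n)
    if g != 1:
        return (g, n // g)  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with a different base a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None  # a**(r/2) is -1 mod n: retry with a different base a
    # x*x is 1 mod n but x is neither 1 nor -1 mod n, so gcd(x - 1, n) is a proper factor.
    p = math.gcd(x - 1, n)
    return (p, n // p)


if __name__ == "__main__":
    print(factor_via_period(15, 7))  # (3, 5)
    print(factor_via_period(21, 2))  # (7, 3)
```

For N = 15 and a = 7 the period is 4, so x = 7^2 mod 15 = 4, and gcd(3, 15) = 3 yields the factors 3 and 5. A quantum computer would speed up only `find_period`; everything else stays classical.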

Challenges and setbacks

Despite the progress, the field remains full of technical challenges. Quantum states are extremely susceptible to interference from their environment, a phenomenon known as “decoherence”. Keeping these states stable long enough for large-scale, reliable calculations has proven to be one of the biggest hurdles.

Moreover, the complexity of quantum mechanics has led to misunderstandings and exaggerations in popular media and even in the scientific community. The portrayal of quantum computers as a panacea for all computational problems is misleading, as their superiority over classical computers is limited to specific problem classes.

Quantum computers vs. classical data centres and AI chips

In contrast to traditional data centres, which rely on classical electronics and chips that integrate billions of transistors on silicon, quantum computers are based on principles that have no direct analogue in the classical world. Although the latest developments, such as Nvidia’s AI chips, significantly accelerate machine learning and AI workloads, they still follow the rules of classical physics.

Quantum computers, on the other hand, could revolutionise complex simulations and optimisation problems in areas such as materials science, drug discovery and cryptography, some of which are practically intractable for conventional computers.

Predictions for the future

While the future of quantum computing is still full of technical and theoretical challenges, its potential is revolutionary. With advances in error correction and scalability, the coming years could finally usher in an era in which quantum computers solve practical, real-world problems that previously seemed out of reach.

The next few years will be crucial in showing whether this technology can fulfil its promise or will remain a niche product for specific applications.